Introduction

In this post I want to introduce the concept of continuous planning, a process flow that we established in my team.

Pre-Planning – Short Description

One of the key meetings in a scrum cycle is the Pre-Planning.

It is usually a long meeting, followed by hours of writing subtasks and time estimates.

In this meeting the team members understand the user stories, challenge the PMs and do estimations. Later the developers will decide on solutions and break them into subtasks.

Background About the Team

Team Structure

My team is a cross-functional one. I have frontend and backend developers, as well as more DevOps-oriented engineers.

This means that developers sometimes have independent tasks that concern only one or two of them.

Products We Own

We are responsible for three major products, and some minor ones.

In one sprint we work on different products. Those products are usually unrelated to each other, which again means independent tasks.

Team Culture

In the beginning the team was small, and so was the company.

We had a “startup culture”.

Deliver fast, no planning, no estimations.

People hate long, boring meetings. They feel like a waste of time.

The Problem

As it turned out, there were many problems in the way we tried to work.

There were common issues that were raised in our retrospectives, as well as my personal observations.

Retrospective

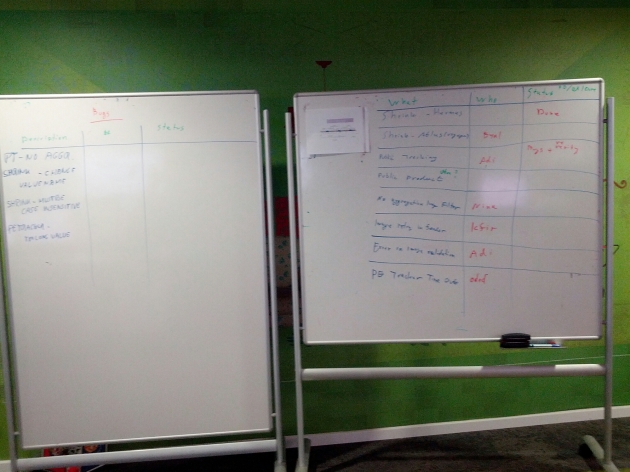

Here’s a list of the main issues we noticed:

- During planning, there were long discussions that were relevant only to one engineer and the PM (a result of having different products and a cross-functional team). People got bored and lost concentration.

- Many user stories were not even started because we discovered, in the middle of the sprint, that the PMs had not clarified them.

- User stories were not done because of a lack of planning.

- Sprint content was overestimated or underestimated.

- Lack of ownership. Features were not done because dependency issues were not thought through in advance.

Solution – Continuous Planning

At some point we started doing things differently. After several iterations, it became continuous planning.

The idea was simple. I asked our PM to provide the user stories for the next sprint when the current sprint starts.

Then I checked them and either assigned them to the relevant developers or pushed them back to the PM.

The developers started looking at the user stories, understood them and added planning using subtasks and time estimations.

So basically we had almost two weeks to plan for the next sprint.

How It Works

User Stories Are Ready in Advance

The crucial part here is the timing: when are the user stories ready?

Let’s say the Sprint duration is two weeks and it starts on Wednesday.

The idea is simple: on Wednesday, when Sprint 18 starts, the PM should already have provided all user stories for Sprint 19.

By “provide” I mean that they are:

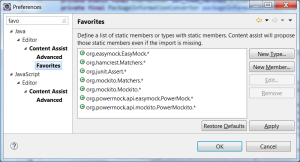

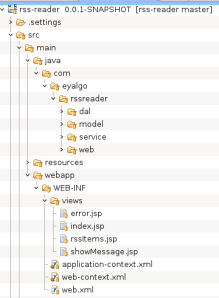

- In the backlog, under Sprint 19 (we use JIRA, so we create sprints in advance)

- Well defined (Description, DoD, etc.)

First Check

The user stories are assigned to me and I go over them. I verify that they are clear and have a proper Definition of Done, and I identify dependencies between the user stories. Then I either assign the user story to a developer or reassign it to the PM, challenging its content or priority.
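The mechanical part of this first check can be sketched in a few lines of Python. This is only an illustration under assumptions: the `UserStory` fields and the `first_check` function are hypothetical names, not part of JIRA or of our actual tooling, and the judgment calls about content, priority and dependencies still need a human.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UserStory:
    # Hypothetical, simplified view of a user story; not a real JIRA schema.
    key: str
    description: str = ""
    definition_of_done: str = ""
    assignee: Optional[str] = None


def first_check(story: UserStory, developer: str, pm: str) -> str:
    """Assign a well-defined story to a developer, otherwise return it to the PM."""
    # "Well defined" is reduced here to: description and DoD are both non-empty.
    well_defined = bool(story.description.strip()) and bool(story.definition_of_done.strip())
    story.assignee = developer if well_defined else pm
    return story.assignee
```

A story with an empty Definition of Done ends up assigned to the PM again, which mirrors the "push back" step described above.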

Discovery and Planning By Each Engineer

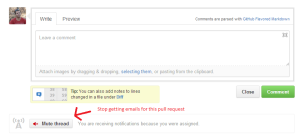

From now on, each engineer will, during the current sprint, start planning the user stories assigned to him for the next sprint. He will talk directly to the PM for clarifications and will identify dependencies.

He has full authority to challenge the user story for not being clear. He can reassign it back to me or the PM.

Collaboration

If there is a dependency within the team, for example between FE and BE, the engineers themselves talk about it and assign the relevant subtasks in the user stories.

Estimations

The estimations are part of the planning.

Each developer will add his own subtasks with time estimates.

Timing Goal – It’s All About “When”

The key element for success in this process is timing. Our goal is for all user stories for the next sprint to be fully provided at the beginning of the current sprint.

We also aim to have all user stories planned (subtasks + estimations) 1-2 days before the next sprint starts.
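The two timing goals are simple date arithmetic. The sketch below assumes a fixed sprint length and a planning buffer of two calendar days. The function `planning_deadlines` and its parameter names are made up for illustration, and the example date is arbitrary apart from being a Wednesday.

```python
from datetime import date, timedelta


def planning_deadlines(current_sprint_start: date,
                       sprint_length_days: int = 14,
                       planning_buffer_days: int = 2) -> dict:
    """Return the key dates for planning the next sprint during the current one."""
    next_sprint_start = current_sprint_start + timedelta(days=sprint_length_days)
    return {
        # Stories for the next sprint should exist when the current sprint starts.
        "stories_ready_by": current_sprint_start,
        # Subtasks and estimations should be done a few days before the next sprint.
        "planning_done_by": next_sprint_start - timedelta(days=planning_buffer_days),
        "next_sprint_start": next_sprint_start,
    }


deadlines = planning_deadlines(date(2024, 1, 3))  # 3 January 2024 is a Wednesday
```

For a sprint starting on Wednesday 3 January 2024, this gives 15 January as the planning deadline and 17 January as the start of the next sprint. The buffer is counted in calendar days here; a team that counts working days would need to adjust it.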

Observations and Conclusion

Our process is still improving. Currently around 10%-20% of the user stories still come at the last minute, violating the timing goal.

We also still encounter dependencies that we didn’t find while planning.

Here’s a list of pros and cons I already see:

Pros

Responsibilities and Ownership

One of the outcomes, which I didn’t anticipate, is that each developer has much more responsibility for and ownership of the user stories.

The engineer must think about the requirement, design the solution and find dependencies, rather than just execute.

Onboarding New Team Members

New team members arrived and had a user story assigned to them on their first day in the office. So they “jumped into the cold water” and started understanding the feature, system and code almost immediately.

Collaboration

Because each team member is responsible for an entire feature, collaboration within the team has increased. There is constant discussion between team members.

It has also increased the collaboration and communication between developers and PMs.

PM Work

Although the PM works harder (see cons), continuous planning forces him to plan ahead better.

This process “forced” the PM to have a clear vision 3-4 sprints in advance. This vision is transparent to everyone, as it is reflected in the JIRA backlog board.

Visibility and Planning Ahead

The PM’s clear vision is reflected in the backlog (a JIRA board in our case), which makes it more transparent. Because the board is usually filled with backlog items divided into sprints, the visibility of future plans is much better.

Challenges (cons)

PM Work

It seems that the PM has more work. User stories should be ready 1-2 weeks in advance.

The PM needs to work on future sprints (plural) while answering questions about the next sprint and verifying the status of the current sprint.

Questionable Capacity

When there is a dedicated meeting / day for pre-planning, it’s easier to measure the capacity of the team.

It’s harder to understand the real capacity of the team while the developers spend time on planning the next sprint during the current one.

Architectural and Design Decisions

Everyone needs to be much more careful about the architecture and design of the system. As each developer plans his part, he needs to be more aware of the plans of other developers.

This is where the manager / lead should assist, checking that everyone is aligned and making sure there is good communication.

Losing Control

The lead / manager has less control, meaning that not everything passes through him.

If you’re a micromanager, you will need to let go.

We identified points where the manager (me) must be involved:

- Dependencies within the team and / or with other teams

- Architectural / design decisions

- System behavior

Conclusion

We established a well understood, simple to follow, clear process.

This process is good for our team. It may be good for other teams, perhaps with some adjustments.

As described above, if

- There are different roles in the team (frontend, backend)

- The team works on different products / projects in the same sprint

- People feel that the pre-planning meeting is a waste of time

Then perhaps continuous planning is a good approach.

This post was originally published in our company's tech blog: http://techblog.applift.com/continuous-pre-planning